Face Recognition in Jumper: Find anyone in your footage

Any user of Jumper knows that the visual language models (VLMs), the machine learning models that power Jumper’s visual and speech search, are impressively adept at spotting objects, scenes and other visual content in your footage. They can spot “a man holding a cup” or “people cheering” but once you start asking them to find scenes of specific people, you’ll quickly run into problems.

Celebrity recognition

In our testing, we’ve found the models can identify actual celebrities, although theres no knowing what celbrity images they’ve been trained on. Due to the blackbox nature of these models (they’re really just a big set of obscure numbers and weights) there’s no simple way of knowing what people a certain VLM can or cannot identify.

Non-celebrity recognition

Earlier this year at IBC, we spoke with a friendly editor who, it turned out, worked on Squid Game: The Challenge. For anyone who missed it, that show involves 456 contestants competing in a series of elimination games to win a big cash prize. The contestants are regular people (which means no VLM will have knowledge of them), and are filmed constantly, from multiple angles (i.e. a lot of footage). The tagging process was, as he put it, “a lot of work” which was probably a very modest way of putting it.

For editors working with casts who are not A‑list public figures, this shortfall becomes obvious very quickly. We’ve heard this from editors in corporate video, documentaries and unscripted productions (but I’d assume editors working in other genres must be facing the versions of the same problem?)

Use cases like where the internal comms team want to pull every shot of a specific executive without hunting through hours of material. Or like the example above, reality TV producers that quickly want to find every moment of a specific contestant.

Face recognition in Jumper

So after hearing enough versions of this story. And after some thinking and talking to the community we decided to add face recognition and face search to Jumper. To stay true to the offline-fully-local-no-cloud nature of Jumper, the new face recognition and search features are also 100% on-device.

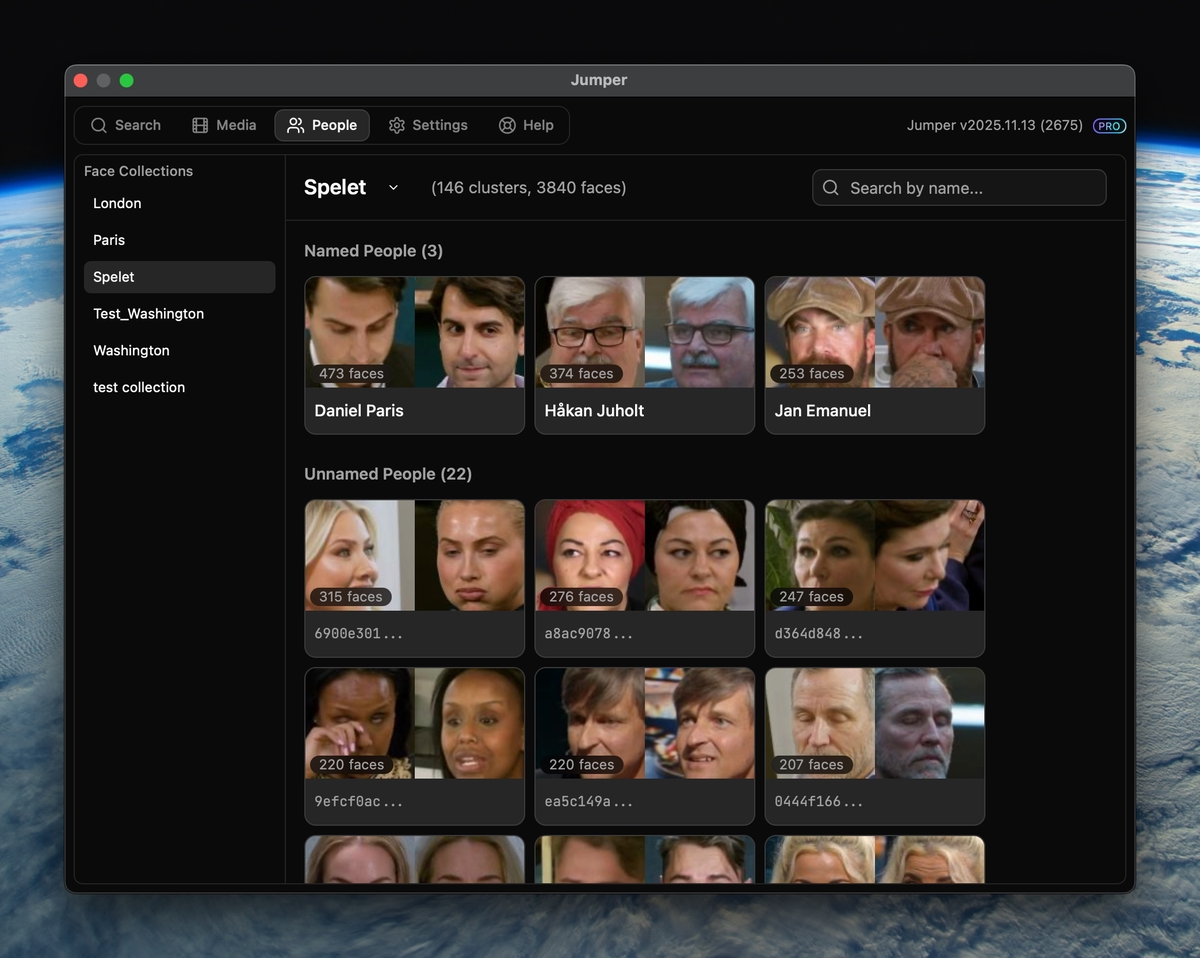

When you analyse your footage, Jumper scans through frame by frame, extracts every face it finds, and groups similar ones together. You end up with groups of faces like “351 appearances of this person” and “80 of that one”. At this stage, the model has no idea what these people are called, it just knows they’re the same person.

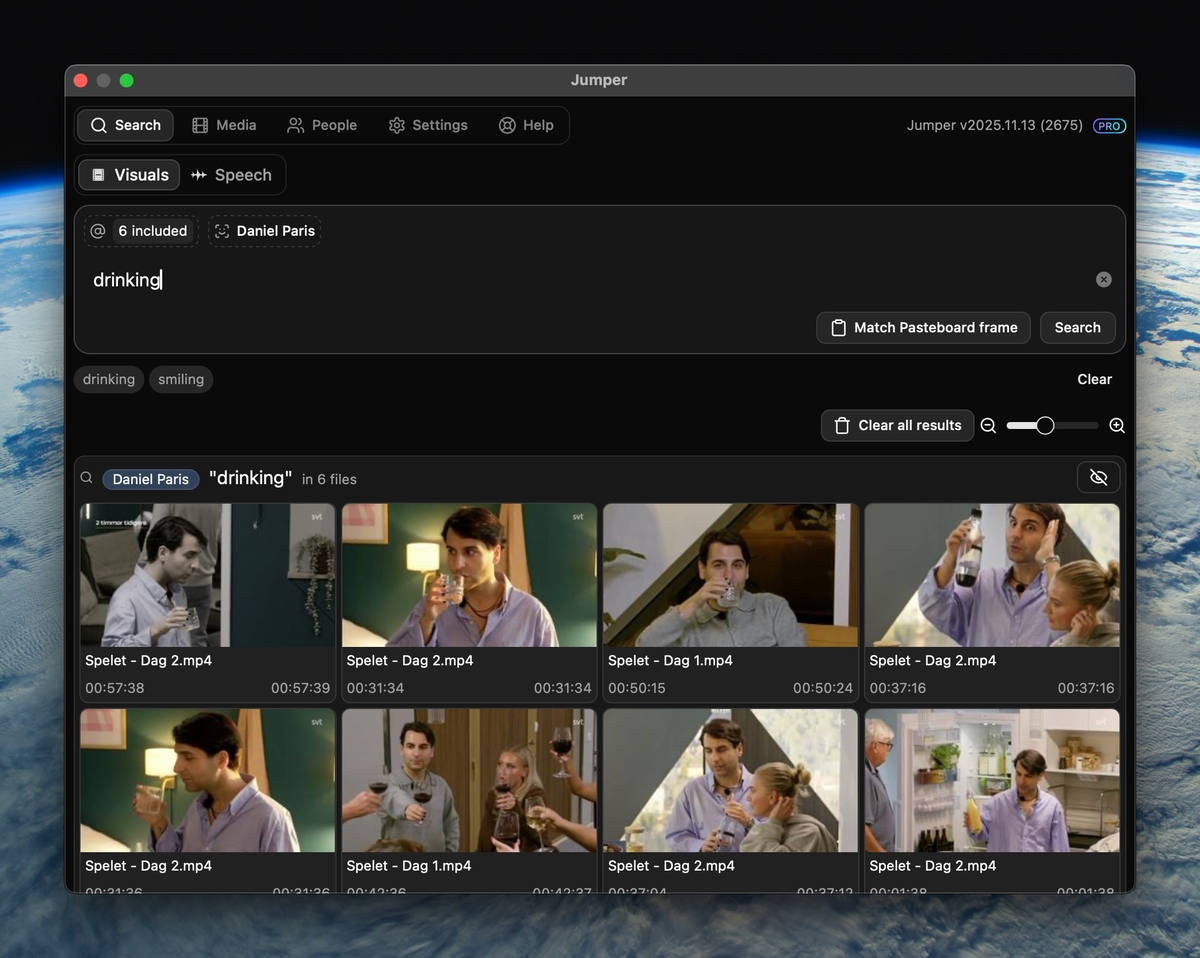

Once you’ve assigned a name to a group, that name becomes a filter in your usual search. Search for “laughing” and you get the expected assortment of happy faces. Add “Zoe” to the filter and you will now find Zoe laughing, and only Zoe. Or in the example below, we’re searching for “Daniel drinking” and we get all the shots of Daniel drinking.

What’s next?

Face recognition and people search are still in beta, but frankly it’s working even better than we first expected. And its a lot better than manually tagging people in your footage. We’re still ironing out the kinks and making sure the experience is as smooth as possible. If you want to try it on your own projects, join our Discord and we’ll give you access.